If you're familiar with Kafka, you know it's a powerful, distributed streaming platform that enables you to build real-time data pipelines and streaming applications. But did you know that you can enhance Kafka's capabilities using clusters?

In this blog post, we'll take a closer look at Kafka clusters, their components, architecture, and how they can help improve the performance and scalability of your messaging system.

To get started, a Kafka cluster is a group of Kafka brokers (servers) that work together to handle the incoming and outgoing data streams for a Kafka system. Each broker is a separate server process, usually running on its own machine, and the brokers communicate with one another over the network to replicate data and coordinate their work.

The primary purpose of a Kafka cluster is to allow for horizontal scalability, meaning that it can handle an increase in load by adding more brokers to the cluster rather than upgrading a single broker's hardware. This allows a Kafka cluster to handle very high data throughput with low latency.

What is Apache Kafka?

Apache Kafka is a distributed streaming platform originally developed at LinkedIn and now maintained as an open-source project by the Apache Software Foundation. It was designed to handle high volumes of data efficiently and in real time, making it a popular choice for building data pipelines and streaming applications.

At its core, Kafka is a messaging system that allows producers (i.e., data sources) to send messages to consumers (i.e., data sinks) in a distributed and fault-tolerant manner. It achieves this using a publish-subscribe (pub-sub) model, where producers publish messages to Kafka topics and consumers subscribe to those topics to receive the messages.

Kafka retains published messages for a configurable amount of time (or up to a configurable size), whether or not they have been consumed. This lets consumers process the data at their own pace and provides a buffer in case of temporary outages or failures.
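To make retention concrete, here is a minimal in-memory sketch, not Kafka's implementation: a single partition modeled as an append-only list of timestamped records, where each consumer tracks its own offset and records older than a retention window are dropped. The `PartitionLog` class and its methods are hypothetical; real brokers delete whole log segments based on settings such as `retention.ms` rather than individual records.

```python
from dataclasses import dataclass, field


@dataclass
class Record:
    offset: int
    timestamp_ms: int
    value: str


@dataclass
class PartitionLog:
    """Hypothetical single-partition log with time-based retention."""
    retention_ms: int
    records: list = field(default_factory=list)
    next_offset: int = 0

    def append(self, value: str, timestamp_ms: int) -> int:
        # Offsets only ever grow; existing records are never modified.
        offset = self.next_offset
        self.records.append(Record(offset, timestamp_ms, value))
        self.next_offset += 1
        return offset

    def enforce_retention(self, now_ms: int) -> None:
        # Drop records older than the retention window (real Kafka drops whole segments).
        cutoff = now_ms - self.retention_ms
        self.records = [r for r in self.records if r.timestamp_ms >= cutoff]

    def read_from(self, offset: int, max_records: int = 10) -> list:
        # Each consumer passes its own offset, so slow and fast readers do not interfere.
        return [r for r in self.records if r.offset >= offset][:max_records]


log = PartitionLog(retention_ms=60_000)
for i, ts in enumerate([0, 30_000, 90_000]):
    log.append(f"event-{i}", ts)

fast_consumer_offset = 3  # already caught up
slow_consumer_offset = 0  # still at the beginning

log.enforce_retention(now_ms=100_000)  # cutoff = 40_000, so event-0 and event-1 expire
print([r.value for r in log.read_from(slow_consumer_offset)])  # ['event-2']
print(log.read_from(fast_consumer_offset))  # []
```

Note that the slow consumer never sees event-0 or event-1: retention is a time-limited buffer, not a guarantee that every consumer eventually reads everything.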

One of the key benefits of Kafka is its ability to handle high data throughput with very low latency. It does this through a distributed design in which each topic's data is split into partitions that are replicated across multiple brokers (servers) in a Kafka cluster.

This allows the system to scale horizontally by adding more brokers as needed rather than relying on a single, monolithic broker that can become a bottleneck.
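A rough sketch of how partitions and their replicas can be spread over brokers follows. It uses a simple round-robin placement that is only loosely modeled on Kafka's default assignment (which also staggers start positions and can take racks into account); the function name `assign_replicas` is hypothetical.

```python
def assign_replicas(num_partitions: int, replication_factor: int, brokers: list) -> dict:
    """Hypothetical round-robin placement: partition -> list of broker ids.

    The first broker in each list acts as the partition's preferred leader.
    """
    if replication_factor > len(brokers):
        raise ValueError("replication factor cannot exceed the number of brokers")
    assignment = {}
    for p in range(num_partitions):
        # Shift the starting broker per partition so leaders are spread out,
        # and take consecutive brokers so replicas land on distinct machines.
        assignment[p] = [brokers[(p + r) % len(brokers)] for r in range(replication_factor)]
    return assignment


layout = assign_replicas(num_partitions=6, replication_factor=3, brokers=[1, 2, 3])
for partition, replicas in layout.items():
    print(partition, replicas)

# With four brokers the same topic spreads over more machines
# (in real Kafka, existing partitions only move after a reassignment).
print(assign_replicas(6, 3, [1, 2, 3, 4])[3])  # [4, 1, 2]
```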

In addition to its distributed design, Kafka has several other features that make it a powerful and flexible platform. For example, it offers strong durability and fault tolerance, as it stores all published messages on disk and replicates each partition to a configurable number of brokers.

It also has built-in support for compression, which can help reduce the amount of data that needs to be transferred and stored.
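Kafka compresses batches of records on the producer side (configured with `compression.type`, which accepts codecs such as `gzip`, `snappy`, `lz4`, and `zstd`). The sketch below uses Python's built-in `gzip` module only to illustrate why batching plus compression pays off for repetitive event data; the sample records are made up, and real ratios depend on your payloads and codec.

```python
import gzip
import json

# Hypothetical batch of similar JSON events, as a producer might accumulate before sending.
batch = [
    json.dumps({"user_id": i % 50, "event": "page_view", "path": "/products"}).encode()
    for i in range(1_000)
]
raw = b"\n".join(batch)
compressed = gzip.compress(raw)

print(f"raw: {len(raw)} bytes, gzip: {len(compressed)} bytes, "
      f"ratio: {len(raw) / len(compressed):.1f}x")
```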

Overall, Apache Kafka is a highly scalable, fault-tolerant, and durable platform that is well-suited for building real-time data pipelines and streaming applications. It has a strong developer community and is used by companies across various industries, including finance, e-commerce, and social media.

What are Kafka Clusters?

As described above, a Kafka cluster is a group of brokers that share the work of storing and serving data streams. Because it scales horizontally, you add brokers to handle more load instead of upgrading a single machine.

One of the key benefits of using Kafka clusters is their ability to process and transmit large amounts of data quickly and efficiently. This makes them ideal for use cases such as real-time analytics, event-driven architectures, and log aggregation.

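In addition to their high performance, Kafka clusters are also highly fault-tolerant. If a broker goes down, Kafka elects a new leader for each affected partition from the replicas that were in sync with the old leader, so producers and consumers can continue working. As long as topics are replicated (for example, with a replication factor of 3) and producers wait for acknowledgment from the in-sync replicas (acks=all together with a suitable min.insync.replicas setting), acknowledged data survives the loss of a broker. The affected partitions stay under-replicated until the failed broker returns or an operator reassigns its replicas.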
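In practice, what happens when a broker fails is that leadership of its partitions moves to another in-sync replica. The simplified model below shows that failover and why `acks=all` producers are refused once too few replicas remain. `PartitionState` is hypothetical; the real controller also tracks leader epochs and prefers replicas in assignment order, which this sketch approximates by taking the first remaining in-sync replica.

```python
from dataclasses import dataclass


@dataclass
class PartitionState:
    """Hypothetical model of one partition's replica state."""
    replicas: list  # all assigned replica broker ids (kept for reference)
    isr: list       # in-sync replicas; the leader is always one of them
    leader: int
    min_insync_replicas: int = 2

    def broker_failed(self, broker_id: int) -> None:
        # A failed broker drops out of the ISR; if it led the partition, promote another in-sync replica.
        self.isr = [b for b in self.isr if b != broker_id]
        if self.leader == broker_id:
            if not self.isr:
                raise RuntimeError("no in-sync replica left; partition is offline")
            self.leader = self.isr[0]

    def can_accept_acks_all(self) -> bool:
        # With acks=all, a write is acknowledged only if enough replicas are in sync.
        return len(self.isr) >= self.min_insync_replicas


p = PartitionState(replicas=[1, 2, 3], isr=[1, 2, 3], leader=1)
p.broker_failed(1)
print(p.leader, p.isr, p.can_accept_acks_all())  # 2 [2, 3] True
p.broker_failed(2)
print(p.leader, p.isr, p.can_accept_acks_all())  # 3 [3] False
```

The second failure shows the trade-off: the partition can still be read from broker 3, but `acks=all` writes are rejected rather than stored on a single broker.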

Setting up a Kafka cluster requires some planning and configuration, as you need to consider factors such as the number of brokers, the hardware resources of each broker, and the data retention policy. However, once a Kafka cluster is up and running, it can be a highly reliable and scalable platform for handling streaming data.

Kafka Clusters Architecture: 5 Major Components

The architecture of a Kafka cluster is centred around a few key components: topics, brokers, ZooKeeper, producers, and consumers. These components work together to form a distributed streaming platform that can handle high volumes of data with low latency and high reliability.

1) Topics

Topics are the core unit of data in a Kafka cluster. Producers publish messages to topics, and consumers subscribe to topics to receive those messages. A topic is a named stream of data that is split into partitions, which are replicated across multiple brokers in the cluster. Each partition is an ordered, immutable sequence of messages to which new messages are continually appended.
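The ordering guarantee holds per partition, which is why producers usually attach a key: records with the same key land in the same partition. Kafka's default partitioner hashes the serialized key with murmur2; this sketch substitutes `zlib.crc32` for simplicity, so its partition numbers will not match a real cluster. `choose_partition` is a hypothetical name.

```python
import zlib
from collections import defaultdict


def choose_partition(key: str, num_partitions: int) -> int:
    # Same key -> same hash -> same partition (crc32 stands in for Kafka's murmur2).
    return zlib.crc32(key.encode("utf-8")) % num_partitions


partitions = defaultdict(list)  # partition number -> append-only list of (key, value)
events = [("user-7", "login"), ("user-3", "login"), ("user-7", "add_to_cart"),
          ("user-7", "checkout"), ("user-3", "logout")]
for key, value in events:
    partitions[choose_partition(key, num_partitions=4)].append((key, value))

# Within user-7's partition, its events appear in the order they were produced.
p7 = partitions[choose_partition("user-7", 4)]
print([v for k, v in p7 if k == "user-7"])  # ['login', 'add_to_cart', 'checkout']
```

Ordering across partitions is not guaranteed: events for user-3 and user-7 may sit in different partitions and be consumed in any relative order.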

2) Brokers

Brokers are the servers that make up a Kafka cluster. They store the partitions of each topic on disk, handle requests from producers and consumers, and replicate partitions to one another. Each broker retains the messages it stores for the topic's configured retention period, which is what lets consumers read at their own pace.

3) ZooKeeper

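ZooKeeper is a distributed coordination service that Kafka has traditionally used to store cluster metadata, track which brokers are members of the cluster, and elect the controller that manages partition leadership. Newer Kafka versions replace ZooKeeper with KRaft mode, in which a quorum of Kafka controllers manages metadata using a Raft-based protocol; KRaft has been production-ready since Kafka 3.3, and Kafka 4.0 removed ZooKeeper support entirely.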

4) Producers

Producers are the source of data in a Kafka cluster. They are responsible for publishing messages to Kafka topics. Producers can be any system or application that generates data and can send it to Kafka.

5) Consumers

Consumers are the destination for data in a Kafka cluster. They are responsible for subscribing to Kafka topics and processing the messages that are published to them.

Consumers can be any system or application that needs to process data from Kafka, such as a data storage system, a real-time analytics platform, or a machine learning model.
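Consumers usually read as part of a consumer group, in which each partition is assigned to exactly one member of the group. The sketch below mimics a round-robin strategy (Kafka ships several assignors, including range, round-robin, and sticky ones); the function name is hypothetical, and in a real cluster the assignment is negotiated through the group coordinator.

```python
def round_robin_assign(partitions: list, consumers: list) -> dict:
    """Hypothetical round-robin assignment: each partition goes to exactly one consumer."""
    assignment = {c: [] for c in consumers}
    for i, partition in enumerate(sorted(partitions)):
        assignment[consumers[i % len(consumers)]].append(partition)
    return assignment


print(round_robin_assign([0, 1, 2, 3, 4, 5], ["c1", "c2"]))
# {'c1': [0, 2, 4], 'c2': [1, 3, 5]}
print(round_robin_assign([0, 1, 2], ["c1", "c2", "c3", "c4"]))
# {'c1': [0], 'c2': [1], 'c3': [2], 'c4': []}
```

The second call shows why adding consumers beyond the partition count does not add throughput: the extra consumer sits idle.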

Managing Kafka Clusters

Managing a Kafka cluster can be complex, but the right approach and tools make it manageable. One of the most important parts of managing a Kafka cluster is monitoring it to ensure it runs smoothly and efficiently, using built-in metrics, third-party tools, or custom monitoring solutions.

By monitoring the cluster, you can identify potential bottlenecks and performance issues before they become critical and take action to optimize the cluster's performance.

Another critical aspect of managing a Kafka cluster is protecting data against loss. Replication within the cluster guards against individual broker failures, but a robust recovery strategy, such as regular backups or mirroring data to a second cluster, is still needed to restore data streams quickly after a larger failure.

Additionally, it is essential to secure data in transit and at rest. This can be done by implementing proper authentication, authorisation, and encryption.

One of the most important things to consider when managing a Kafka cluster is the ability to scale it as the amount of data grows, so that the cluster can handle the increased load while the system remains responsive.

Properly scaling a Kafka cluster involves monitoring resources such as CPU, memory, disk, and network usage, and adjusting the cluster size and configuration as needed.

Managing a Kafka cluster also involves dealing with capacity issues. Beyond adding brokers, this means finding a balance between the number of brokers, partitions, producers, and consumers in the cluster.
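A back-of-the-envelope sizing sketch, under stated assumptions: the partition count is estimated as target throughput divided by measured per-partition producer and consumer throughput (a common rule of thumb), and raw disk need is write rate × retention × replication factor. The function names and all input numbers are hypothetical; measure your own per-partition throughput before relying on the results.

```python
import math


def partitions_needed(target_mb_s: float, producer_mb_s_per_partition: float,
                      consumer_mb_s_per_partition: float) -> int:
    # Enough partitions that neither producers nor consumers become the bottleneck.
    return max(math.ceil(target_mb_s / producer_mb_s_per_partition),
               math.ceil(target_mb_s / consumer_mb_s_per_partition))


def disk_needed_tb(write_mb_s: float, retention_days: float, replication_factor: int) -> float:
    # Every byte written is stored once per replica for the whole retention period.
    seconds = retention_days * 24 * 3600
    return write_mb_s * seconds * replication_factor / 1_000_000  # MB -> TB (decimal)


print(partitions_needed(target_mb_s=200, producer_mb_s_per_partition=10,
                        consumer_mb_s_per_partition=20))  # 20
print(round(disk_needed_tb(write_mb_s=50, retention_days=7, replication_factor=3), 1))  # 90.7
```

Real plans add headroom for growth and rebalancing; compression lowers the disk figure, while indexes and uneven partition sizes raise it.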

Deploying Kafka Clusters

Deploying a Kafka cluster can be daunting, but it can be done quickly with the right approach and tools. There are several options for deploying a Kafka cluster, each with its own benefits and drawbacks. One of the most common options is using a managed service.

This option allows you to leverage the resources of a cloud-based provider to deploy and manage your Kafka cluster without needing additional hardware or software. This can be a cost-effective option, as it eliminates the need for upfront investment in hardware and can make scaling simpler.

Another option for deploying a Kafka cluster is on-premises. This option allows you to deploy your cluster on your own hardware and infrastructure, giving you more control over your data and the ability to keep your data within your network. However, this option may require a more significant upfront investment in hardware and additional IT resources for deployment and management.

Finally, you can run and manage Kafka yourself on cloud infrastructure, such as virtual machines on Amazon Web Services or Microsoft Azure. This option combines some benefits of managed services and on-premises deployments: you keep control over the configuration while gaining the cloud's scalability and pay-as-you-go pricing.

Additionally, cloud-based implementations can give great flexibility and control over your resources without needing significant upfront investment.

In addition to the deployment options, it's also essential to consider the available tools and frameworks that can help you with cluster management, scaling, and monitoring. These include open-source tools like Apache Ambari, distributions such as Confluent Platform, and monitoring solutions like Datadog, Grafana, or Splunk.

The key to a successful deployment is evaluating the options available, considering your specific use case, and selecting the option that best fits your organisation's needs. By doing so, you can ensure that your Kafka cluster is deployed quickly, easily, and efficiently.

In short, deploying a Kafka cluster can be tricky, but by selecting the deployment option that suits your needs and using the right tools for management, scaling, and monitoring, you can ensure a successful deployment and smooth operations afterwards.

Kafka Cluster Monitoring and Metrics

Cluster monitoring and metrics are essential for understanding the health and performance of your Kafka cluster. By monitoring the cluster, you can identify potential bottlenecks and performance issues before they become critical and take action to optimise the cluster's performance.

Kafka exposes a wide range of built-in metrics through JMX, such as incoming message and byte rates, request latencies, and under-replicated partitions on the brokers, and records lag on the consumers. The kafka-consumer-groups.sh command-line tool reports consumer lag per partition, and third-party tools such as Prometheus, Grafana, and Datadog can collect and visualise these metrics.

In addition to Kafka's own metrics, it's also essential to monitor the resources used by the cluster, such as CPU, memory, and disk usage. This information can help you understand the cluster's performance and capacity and identify when the cluster needs to be scaled or reconfigured.

One of the best ways to monitor and track these metrics is by using a centralised monitoring platform such as Grafana and integrating it with a time series database like InfluxDB or Prometheus. This can provide a real-time view of the metrics and help you to identify patterns and trends in the data.

By monitoring the cluster regularly and tracking metrics, you can create alerts and set thresholds to be notified when an anomaly occurs. This can help you quickly identify and resolve issues and ensure that your Kafka cluster is always performing optimally.
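One metric most alerting setups track is consumer lag: for each partition, the log-end offset minus the consumer group's committed offset (the same figures `kafka-consumer-groups.sh --describe` prints as LOG-END-OFFSET, CURRENT-OFFSET, and LAG). The function below is a hypothetical threshold check over offsets you would collect from your monitoring stack; the threshold is an assumption to tune for your workload.

```python
def lag_alerts(log_end_offsets: dict, committed_offsets: dict, max_lag: int) -> dict:
    """Return {partition: lag} for partitions whose lag exceeds max_lag.

    Both inputs map partition number -> offset. A partition with no committed
    offset is treated as committed at 0 (a simplification; a real check would
    use the partition's log-start offset).
    """
    alerts = {}
    for partition, end_offset in log_end_offsets.items():
        lag = end_offset - committed_offsets.get(partition, 0)
        if lag > max_lag:
            alerts[partition] = lag
    return alerts


end = {0: 15_000, 1: 12_400, 2: 9_800}
committed = {0: 14_950, 1: 2_400}
print(lag_alerts(end, committed, max_lag=1_000))  # {1: 10000, 2: 9800}
```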

In short, monitoring and metrics play a vital role in the success of a Kafka cluster. By keeping an eye on the metrics, you can anticipate and prevent issues and ensure that the cluster runs at peak performance.

The right tools make it easy to track these metrics, so you can make informed decisions about tuning your cluster for performance and scalability.

Kafka Cluster Security

When it comes to Kafka Clusters, security is of paramount importance. After all, a Kafka cluster typically handles sensitive data streams, and it is crucial to protect that data from unauthorized access or tampering.

There are several critical areas of security to consider when working with a Kafka cluster, including authentication, authorization, and encryption.

Authentication is the process of verifying the identity of users or clients that connect to the Kafka cluster. Kafka supports several methods, including SASL mechanisms (such as PLAIN, SCRAM, Kerberos via GSSAPI, and OAUTHBEARER) for username/password or token-based authentication, and mutual TLS for certificate-based authentication.

By ensuring that only authenticated clients can connect to the cluster, you can reduce the risk of unauthorized access to your data streams.

Authorization is the process of controlling access to resources and data within the Kafka cluster. In Apache Kafka, this is done with access control lists (ACLs) that allow or deny principals (users or services) specific operations, such as reading from or writing to a topic, so that each principal can only access the data it is permitted to. This helps prevent data leaks and unauthorized access to sensitive data streams.
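To illustrate how ACL-style authorization is evaluated, here is a simplified sketch: each rule names a principal, an operation, a topic, and whether it allows or denies. As with Kafka's default authorizer, a matching DENY wins over any ALLOW, and a request with no matching ALLOW is rejected. The `AclRule` type and `is_authorized` function are hypothetical; real Kafka ACLs also support wildcards, prefixed resource patterns, host restrictions, and many more resource types.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AclRule:
    principal: str   # e.g. "User:analytics"
    operation: str   # e.g. "Read", "Write"
    topic: str
    permission: str  # "ALLOW" or "DENY"


def is_authorized(rules: list, principal: str, operation: str, topic: str) -> bool:
    matching = [r for r in rules
                if r.principal == principal and r.operation == operation and r.topic == topic]
    if any(r.permission == "DENY" for r in matching):
        return False  # an explicit deny always wins
    return any(r.permission == "ALLOW" for r in matching)  # no allow rule -> denied


rules = [
    AclRule("User:analytics", "Read", "payments", "ALLOW"),
    AclRule("User:checkout", "Write", "payments", "ALLOW"),
    AclRule("User:checkout", "Read", "payments", "DENY"),
]
print(is_authorized(rules, "User:analytics", "Read", "payments"))   # True
print(is_authorized(rules, "User:analytics", "Write", "payments"))  # False
print(is_authorized(rules, "User:checkout", "Read", "payments"))    # False
```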

Encryption in transit protects data streams as they travel across the network. Kafka supports Transport Layer Security (TLS) between clients and brokers and between brokers; its configuration still uses the older name SSL (for example, in the SSL and SASL_SSL security protocols), but the protocol actually in use is TLS. By encrypting data in transit, you ensure that even if the data is intercepted, it is unreadable to unauthorized parties.
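For reference, a Java client that authenticates with SCRAM over a TLS-encrypted connection is typically configured with properties along these lines. The sketch below only renders the properties text; the helper name, file path, username, and passwords are placeholders, and you should confirm the settings against the security documentation for your Kafka version.

```python
def client_security_properties(username: str, password: str, truststore_path: str,
                               truststore_password: str) -> str:
    """Render client properties for SASL/SCRAM over TLS (placeholder values)."""
    props = {
        # SASL_SSL = authenticate with SASL over a TLS-encrypted connection.
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "SCRAM-SHA-512",
        "sasl.jaas.config": (
            "org.apache.kafka.common.security.scram.ScramLoginModule required "
            f'username="{username}" password="{password}";'
        ),
        # Truststore holding the CA certificate that signed the brokers' certificates.
        "ssl.truststore.location": truststore_path,
        "ssl.truststore.password": truststore_password,
    }
    return "\n".join(f"{key}={value}" for key, value in props.items())


print(client_security_properties("analytics", "change-me",
                                 "/etc/kafka/client.truststore.jks", "change-me"))
```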

Another vital aspect is securing data at rest. Apache Kafka does not encrypt the log files it writes to disk, so this is typically handled with disk or file-system encryption, combined with access controls on the broker machines.

Additionally, it's essential to stay informed about security best practices and industry standards for Kafka clusters. This includes keeping track of newly disclosed security vulnerabilities and patches and regularly testing your cluster for security vulnerabilities.

Hence, securing a Kafka cluster is a multifaceted task. Still, by following best practices, staying informed about the latest security developments, and implementing the proper security measures, you can ensure that your Kafka cluster is well-protected and that your data streams are safe from unauthorized access or tampering.

Three benefits of a monolithic Kafka Cluster

1) A Global Event Hub

A monolithic Kafka cluster serves as a global event hub for your organisation. It provides a single point of ingress and egress for all your streaming data, making it easier to integrate various systems and applications.

This can reduce the complexity of your data architecture and streamline the flow of data within your organisation.

2) No Technical Constraints

Another benefit of a monolithic Kafka cluster is that it removes technical constraints on your data streams. Because a single cluster scales out by adding brokers, you can scale it up or down as needed without being limited by the capacity of any individual broker.

This can be especially useful if you have varying data volume levels or need to handle traffic spikes.

3) A Single Kafka Cluster is Cheaper

A single monolithic Kafka cluster can be more cost-effective than multiple smaller clusters. A single cluster takes advantage of economies of scale and avoids the overhead of managing several clusters.

This can be especially important for organisations with limited resources or tight budgets.

Thus, a monolithic Kafka cluster can provide several benefits, including serving as a global event hub, removing technical constraints on your data streams, and being more cost-effective than using multiple smaller clusters.

Whether you're building a real-time data pipeline or a streaming application, a monolithic Kafka cluster can be a valuable part of your toolkit.

Benefits and challenges of multiple Kafka Clusters

Using multiple Kafka clusters can offer several benefits for organisations with complex data architectures or high data volumes. Key benefits include increased scalability, improved fault tolerance, and the ability to isolate different data types.

A) Increased scalability

Increased scalability is one of the primary advantages of using multiple Kafka clusters. By dividing your data streams among separate clusters, you can scale each cluster independently to meet the specific needs of its data streams.

This can be especially useful if you have different types of data with varying scalability requirements or if you need to handle spikes in traffic for specific data streams.

B) Improved fault tolerance

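Improved fault tolerance is another benefit of using multiple Kafka clusters. If one cluster goes down, the others keep running, so a failure is contained rather than affecting every data stream. If data is also replicated between clusters (for example, with MirrorMaker 2) and clients are set up to fail over, the affected streams can be moved to another cluster with little disruption. This can be especially important for mission-critical applications that need to be highly available.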

C) Isolating different data types

By placing different types of data streams in separate clusters, you can optimize each cluster for the specific needs of the data it carries.

For example, you could create a separate cluster for high-volume data streams, a separate cluster for sensitive data streams, etc. This can help improve the performance and security of your data architecture.

While there are many benefits to using multiple Kafka clusters, there are also some challenges to consider. One challenge is the increased complexity of managing multiple clusters.

You will need to ensure that each cluster is configured and maintained correctly, which can be time-consuming and resource-intensive. You will also need strategies for routing data between clusters and keeping data consistent across them.

Another challenge is cost. A single large cluster benefits from economies of scale, whereas the cost of maintaining and scaling multiple smaller clusters can add up quickly. Consider your budget and resource constraints carefully before adopting multiple Kafka clusters.

1) Operational Concerns of Decoupling

A) Segregated Workloads Facilitate Operational Tasks

One of the main benefits of using separate Kafka clusters to segment your data streams is facilitating operational tasks such as maintenance, upgrades, and monitoring.

By dividing your data streams into separate clusters, you can more easily manage and maintain each cluster independently, which can help improve the reliability and performance of your data architecture.

For example, if you need to perform maintenance on one cluster, you can do so without affecting the other clusters. This can reduce downtime and minimise disruptions to your data streams.

Similarly, you can upgrade one cluster without impacting the others, significantly reducing the downtime and effort required for upgrades.

Segregated workloads can also make monitoring and troubleshooting issues within your data architecture easier. By dividing your data streams into separate clusters, you can more easily isolate and identify any issues that may arise.

This can help you resolve problems more quickly and prevent them from spreading to other parts of your data architecture.

In addition to these benefits, segregated workloads can make it easier to apply different configurations or policies to different data streams.

For example, you could apply additional security measures to sensitive data streams or different retention policies for various data types. By using separate Kafka clusters, you can more easily apply these different configurations or approaches to the appropriate data streams.
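Retention is set per topic through the `retention.ms` setting (the broker default is 7 days, `log.retention.hours=168`), so different streams can keep data for different periods. As a small illustration, the hypothetical helper below converts retention periods in days into topic-level `retention.ms` values; the topic names and periods are made up.

```python
MS_PER_DAY = 24 * 60 * 60 * 1000


def retention_configs(retention_days_by_topic: dict) -> dict:
    """Map topic name -> topic-level config dict with retention.ms set."""
    return {topic: {"retention.ms": str(days * MS_PER_DAY)}
            for topic, days in retention_days_by_topic.items()}


configs = retention_configs({"clickstream": 3, "payments-audit": 365, "default-events": 7})
print(configs["default-events"])  # {'retention.ms': '604800000'}
print(configs["payments-audit"])  # {'retention.ms': '31536000000'}
```

Values like these could then be applied per topic, for example with `kafka-configs.sh --bootstrap-server <host:port> --alter --entity-type topics --entity-name payments-audit --add-config retention.ms=31536000000`, or through an admin client.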

To conclude, segregated workloads can greatly facilitate operational tasks such as maintenance, upgrades, and monitoring, which can help improve the reliability and performance of your data architecture.

Whether you're building a real-time data pipeline or a streaming application, using separate Kafka clusters can be a valuable tool for managing your data streams and ensuring that your data architecture runs smoothly.

B) Faster Upgrades with Separate Kafka Clusters

One of the primary advantages of using separate Kafka clusters to segment your data streams is the ability to perform faster upgrades. If you have a large, monolithic Kafka cluster, upgrading the entire cluster can be time-consuming and resource-intensive.

By using separate Kafka clusters, you can upgrade each cluster independently, significantly reducing the downtime and effort required for upgrades.

For example, let's say you have a monolithic Kafka cluster carrying three data streams: A, B, and C. Kafka supports rolling upgrades, in which brokers are restarted one at a time, but on a large cluster a rolling upgrade can take a long time, and all three streams are exposed to any problem the new version introduces.

On the other hand, if you use a separate Kafka cluster for each data stream, you can upgrade each cluster independently. For example, you could upgrade the cluster that carries stream A while the clusters for streams B and C continue to operate normally.

This can significantly reduce the downtime and effort required for upgrades and can help improve the overall reliability of your data architecture.
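Whether you upgrade one large cluster or several small ones, the usual procedure is a rolling upgrade. The sketch below models that loop: restart one broker at a time and wait until no partitions are under-replicated before moving on. `restart_broker` and `under_replicated_partitions` are hypothetical callbacks standing in for your deployment tooling and metrics system, not Kafka APIs.

```python
import time
from typing import Callable, Iterable


def rolling_upgrade(brokers: Iterable[int],
                    restart_broker: Callable[[int], None],
                    under_replicated_partitions: Callable[[], int],
                    poll_seconds: float = 0.0,
                    max_polls: int = 100) -> list:
    """Restart brokers one at a time, waiting for replicas to catch up in between.

    Returns the order in which brokers were restarted.
    """
    order = []
    for broker in brokers:
        restart_broker(broker)
        order.append(broker)
        # Do not touch the next broker until every partition is fully replicated again.
        for _ in range(max_polls):
            if under_replicated_partitions() == 0:
                break
            time.sleep(poll_seconds)
        else:
            raise TimeoutError(f"cluster did not recover after restarting broker {broker}")
    return order


# Simulated cluster: each restart leaves some partitions under-replicated for two polls.
state = {"urp_polls_left": 0}


def fake_restart(broker_id: int) -> None:
    state["urp_polls_left"] = 2


def fake_urp() -> int:
    if state["urp_polls_left"] > 0:
        state["urp_polls_left"] -= 1
        return 5
    return 0


print(rolling_upgrade([1, 2, 3], fake_restart, fake_urp))  # [1, 2, 3]
```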

Another benefit of using separate Kafka clusters for upgrades is the ability to test new versions or configurations on a smaller scale before rolling them out to the entire system.

This can help you identify and resolve any issues before they affect your entire data architecture, which can help reduce the risk of downtime or other disruptions.

Using separate Kafka clusters for your data streams can therefore simplify upgrades. By upgrading each cluster independently, you can reduce the downtime and effort required for upgrades and more easily test new versions or configurations before rolling them out to the entire system.


C) Regulatory Compliance Issues

Regulatory compliance can be a significant concern for your data architecture if you handle sensitive or regulated data. Depending on the types of data you are handling and the industries you operate in, you may need to adhere to specific regulatory standards related to data privacy, security, and retention.

Using separate Kafka clusters can help you better manage your data and ensure that you comply with these regulations.

For example, let's say you are handling a large bank's sensitive financial data. You may need to ensure that this data is stored and transmitted securely, in compliance with relevant regulatory standards such as the Payment Card Industry Data Security Standard (PCI DSS).

By using separate Kafka clusters to handle this data, you can more easily apply security measures and policies to ensure compliance.

Similarly, if you are handling healthcare data, you may need to comply with regulatory standards such as the Health Insurance Portability and Accountability Act (HIPAA). Using separate Kafka clusters can help you better manage and secure this data, ensuring that it is handled appropriately.

Using separate Kafka clusters can also help you more easily apply different retention policies to varying data types. For example, you might need to retain certain types of data for extended periods due to regulatory requirements. In contrast, other data types can be deleted after a shorter period.

By using separate Kafka clusters, you can more easily apply the appropriate retention policies to each data stream.

That's why separate Kafka clusters can be valuable for regulatory compliance. By dividing your data streams into different clusters, you can more easily manage and secure your data, ensuring that you comply with relevant regulatory standards.


2) The Challenges of Tenant Isolation

A) Resource Isolation

One of tenant isolation's main challenges is ensuring that each tenant's resources are adequately isolated. This can be especially important if you use shared resources, such as servers or storage devices, to host multiple Kafka clusters.

You will need to ensure that one tenant cannot overuse shared resources to the detriment of another tenant.
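Kafka addresses part of this problem with client quotas (for example `producer_byte_rate` and `consumer_byte_rate`, set per user or client ID), which the broker enforces by throttling clients that exceed them rather than rejecting their requests. The sketch below illustrates the idea with a hypothetical per-tenant check: given the bytes a tenant produced during a measurement window, it computes how long to delay that tenant so its average rate falls back to the quota. It is a simplification, not the broker's actual algorithm.

```python
def throttle_delay_seconds(bytes_in_window: int, window_seconds: float,
                           quota_bytes_per_second: float) -> float:
    """Delay needed so that bytes_in_window / (window + delay) <= quota."""
    observed_rate = bytes_in_window / window_seconds
    if observed_rate <= quota_bytes_per_second:
        return 0.0
    # Solve bytes / (window + delay) = quota for delay.
    return bytes_in_window / quota_bytes_per_second - window_seconds


tenant_quota = 1_000_000  # hypothetical 1 MB/s per tenant
print(throttle_delay_seconds(5_000_000, 10, tenant_quota))   # 0.0 (0.5 MB/s, within quota)
print(throttle_delay_seconds(15_000_000, 10, tenant_quota))  # 5.0 (1.5 MB/s, too fast)
```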

B) Security and Access Issues

Another challenge of tenant isolation is ensuring that each tenant's security and access controls are correctly enforced.

This can be especially important if you handle sensitive or regulated data, as you must ensure that only authorized users can access the data. You will also need to ensure that the data of one tenant is not accessible to other tenants.

C) Logical Decoupling

A third challenge of tenant isolation is the need to ensure that the data streams of each tenant are logically decoupled from one another.

This can be especially important if you are using the same Kafka cluster for multiple tenants, as you will need to ensure that the data streams are properly separated and that there is no overlap between them.
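A common convention for logically separating tenants on a shared cluster is to give each tenant its own topic-name prefix and grant access on that prefix (Kafka ACLs support prefixed resource patterns for this). The helpers below are a hypothetical sketch of such a naming scheme and the check it enables; the `<tenant>.<stream>` format is an assumption, not a Kafka requirement.

```python
import re

TENANT_ID = re.compile(r"^[a-z0-9-]+$")  # no dots, so tenant prefixes cannot nest


def tenant_topic(tenant: str, stream: str) -> str:
    # Hypothetical convention: "<tenant>.<stream>", e.g. "acme.orders".
    if not TENANT_ID.match(tenant):
        raise ValueError(f"invalid tenant id: {tenant!r}")
    return f"{tenant}.{stream}"


def tenant_may_access(tenant: str, topic: str) -> bool:
    # Mirrors a prefixed ACL: a tenant may only touch topics under its own prefix.
    return topic.startswith(tenant + ".")


orders = tenant_topic("acme", "orders")
print(orders)                                        # acme.orders
print(tenant_may_access("acme", orders))             # True
print(tenant_may_access("globex", orders))           # False
print(tenant_may_access("acme", "acmecorp.orders"))  # False: the dot prevents prefix collisions
```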

3) Reasons for Segregating Kafka Clusters

A) Data Locales

One reason for segregating Kafka clusters is to handle data generated or used in different geographic locations. For example, suppose your data streams are generated in Europe, Asia, and North America.

In that case, you could create a separate Kafka cluster for each region to minimise latency and ensure that the data is stored and processed as close to its source as possible.
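On the producer side, routing data to the cluster in its region can be as simple as a lookup from region to bootstrap servers. The hostnames, region codes, and `bootstrap_for` helper below are made up; the design choice of raising on an unknown region, rather than falling back to another region's cluster, is an assumption that suits data-residency rules.

```python
# Hypothetical region -> bootstrap.servers mapping for three regional clusters.
REGIONAL_BOOTSTRAP = {
    "eu": "kafka-eu-1.example.internal:9092,kafka-eu-2.example.internal:9092",
    "ap": "kafka-ap-1.example.internal:9092,kafka-ap-2.example.internal:9092",
    "na": "kafka-na-1.example.internal:9092,kafka-na-2.example.internal:9092",
}


def bootstrap_for(region: str) -> str:
    """Return the bootstrap.servers value for the cluster in `region`.

    Unknown regions raise instead of falling back to another region, so data
    is never silently sent across a geographic boundary.
    """
    try:
        return REGIONAL_BOOTSTRAP[region]
    except KeyError:
        raise ValueError(f"no Kafka cluster configured for region {region!r}") from None


print(bootstrap_for("eu").split(",")[0])  # kafka-eu-1.example.internal:9092
```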

B) Fine-Tuning System Parameters

Another reason for segregating Kafka clusters is to fine-tune the performance and configuration of each cluster to meet the specific needs of the data streams it handles.

For example, you could create separate clusters for high-volume data streams, low-volume data streams, or data streams with different performance or security requirements. By fine-tuning the configuration of each cluster, you can optimise the performance of your data architecture.

C) Domain Ownership

A third reason for segregating Kafka clusters is to clarify ownership and responsibilities within your organisation. By creating separate clusters for different teams or departments, you can more easily assign ownership and responsibility for each cluster and ensure each team has the resources it needs to manage its data streams.

D) Purpose-Built Clusters

Finally, you might create separate Kafka clusters for specific purposes or applications. For example, you might create one cluster for a real-time data pipeline, another for a streaming analytics application, and so on. By creating purpose-built clusters, you can ensure that each cluster is optimised for the specific needs of the application it supports.

Overall, there are many reasons for segregating Kafka clusters, including data locales, fine-tuning system parameters, domain ownership, and purpose-built clusters. By carefully considering these factors, you can ensure that your data architecture is optimised for your specific needs and requirements.

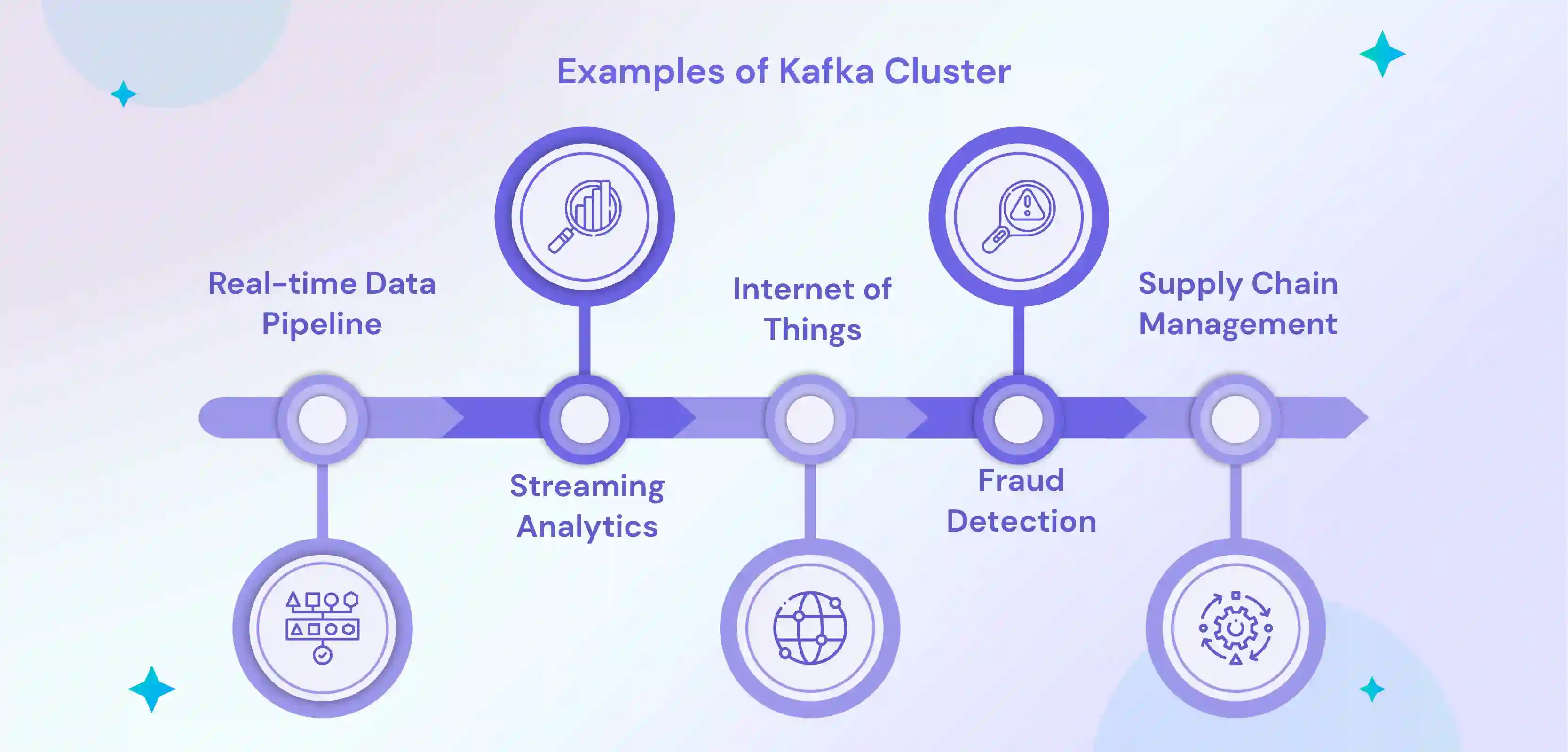

Examples of Kafka Clusters

Here are a few examples of how Kafka clusters are used:

1) Real-time data pipeline:

One common use case for Kafka clusters is a real-time data pipeline. In this scenario, Kafka clusters can be used to ingest, process, and transmit large volumes of data in near real-time.

For example, a financial institution might use Kafka clusters to ingest and process stock ticker, transaction, or market data in real time, enabling real-time analytics and decision-making.

2) Streaming analytics:

Another common use case for Kafka clusters is as a platform for streaming analytics. In this scenario, Kafka clusters can be used to ingest, process, and transmit data streams in real-time, enabling real-time analytics and visualisation of the data.

For example, a marketing firm might use Kafka clusters to ingest and process data streams from social media platforms, allowing it to analyse trends and patterns in real-time.

3) Internet of Things (IoT):

Kafka clusters can also be used in the Internet of Things (IoT) context. Kafka clusters can be used in this scenario to ingest and process data streams from IoT devices, such as sensors or smart appliances.

For example, a manufacturer might use Kafka clusters to ingest and process data streams from manufacturing equipment, enabling real-time monitoring and maintenance of the equipment.

4) Fraud detection:

Kafka clusters can also be used for fraud detection. In this scenario, Kafka clusters can be used to ingest and process data streams from financial transactions, enabling real-time analysis and detection of fraudulent activity.

For example, a bank might use Kafka clusters to ingest and process data streams from credit card transactions, allowing it to identify and prevent fraudulent activity in real-time.
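As a toy illustration of the kind of rule a fraud-detection consumer might apply to such a stream, the sketch below flags a card that makes more than a given number of transactions within a sliding time window. The `VelocityCheck` class, thresholds, and event format are invented for the example; production systems typically build on a stream-processing framework such as Kafka Streams and use far richer models.

```python
from collections import defaultdict, deque


class VelocityCheck:
    """Flag a card with more than `max_txns` transactions within `window_s` seconds."""

    def __init__(self, max_txns: int, window_s: float):
        self.max_txns = max_txns
        self.window_s = window_s
        self.recent = defaultdict(deque)  # card id -> timestamps inside the window

    def observe(self, card_id: str, ts: float) -> bool:
        q = self.recent[card_id]
        q.append(ts)
        # Evict timestamps that have slid out of the window (assumes events arrive in time order).
        while q and q[0] <= ts - self.window_s:
            q.popleft()
        return len(q) > self.max_txns


check = VelocityCheck(max_txns=3, window_s=60)
events = [("card-1", 0), ("card-1", 10), ("card-2", 15),
          ("card-1", 20), ("card-1", 30), ("card-1", 95)]
print([check.observe(card, ts) for card, ts in events])
# [False, False, False, False, True, False]
```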

5) Supply chain management:

Kafka clusters can also be used in supply chain management. Kafka clusters can be used in this scenario to ingest and process data streams from various sources, such as sensors, logistics, or ERP systems.

By analysing these data streams in real-time, organisations can gain a more accurate and up-to-date view of their supply chain, optimising their operations, reducing costs, and improving customer service.

For example, a retail company might use Kafka clusters to ingest and process data streams from its distribution centres, allowing it to forecast demand more accurately and optimise its inventory management.

Similarly, a transportation company might use Kafka clusters to ingest and process data streams from its fleet of vehicles, enabling it to optimise routes, reduce fuel consumption, and improve maintenance schedules.

Conclusion

To conclude, Kafka clusters can be a powerful tool for building real-time data architectures. Whether you are building a real-time data pipeline, a streaming analytics application, or a platform for the Internet of Things, Kafka clusters can help you ingest, process, and transmit large volumes of data in near real-time.

By segmenting your data streams into separate Kafka clusters, you can gain several benefits, including the ability to manage and maintain each cluster independently, apply different configurations or policies to different data streams, and optimize the performance and design of each cluster for the data it handles.

However, there are also challenges when using separate Kafka clusters, including resource isolation, security and access issues, and logical decoupling. By addressing these challenges, you can ensure that your data architecture runs smoothly and efficiently.
