To put it simply, a “Kafka Consumer” acts like a mailman. It collects messages from Kafka and delivers them to other destinations, such as a website or an application. This lets you process and evaluate real-time data streams.

Kafka Consumers are commonly used for data warehousing, data integration, and data analytics or evaluation. Companies also use them for other purposes that are hard to define, because these vary with the context and with how end users apply them.

Companies use Kafka Consumers for real-time decision-making and for reporting analytic results. They can do this because Kafka streams live data in real time. This data is obtained from a variety of sources, including databases, message queues, and other online services.

Prerequisites

To use the Kafka Consumer, a few things must be understood first.

First, the user should have a good grasp of the topics and partitions from which the consumer needs to read data. They should know about the Kafka cluster and the broker nodes the consumer connects to, and the consumer must have the configuration required to connect to and authenticate with those brokers.

Secondly, the consumer should be able to handle large volumes of data efficiently. This includes processing messages asynchronously, scaling up or down with the load, and being resilient to failure.

Thirdly, the consumer should be able to track and manage offsets correctly. An offset records the consumer's position within a partition of a topic. After processing a message, the consumer should commit its offset so that after a restart it resumes where it left off; by convention, the committed offset is the offset of the next message to read.
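To make the offset idea concrete, here is a minimal in-memory sketch. `ToyPartitionLog` and `ToyConsumer` are hypothetical classes that only model a partition as a list and a consumer as a table of committed offsets; they are not the Kafka client API. The point is the ordering: process first, then commit `offset + 1`.

```python
from collections import defaultdict


class ToyPartitionLog:
    """In-memory stand-in for one topic partition; NOT a Kafka API."""

    def __init__(self, messages):
        self.messages = list(messages)  # a message's offset is its list index

    def read_from(self, offset, max_records):
        """Return up to max_records (offset, message) pairs starting at offset."""
        end = min(offset + max_records, len(self.messages))
        return [(o, self.messages[o]) for o in range(offset, end)]


class ToyConsumer:
    """Keeps one committed offset per (topic, partition) key."""

    def __init__(self):
        # Committed offset = offset of the NEXT message to read (last processed + 1).
        self.committed = defaultdict(int)

    def poll_and_process(self, key, log, handler, max_records=10):
        for offset, message in log.read_from(self.committed[key], max_records):
            handler(message)  # if this raises, the offset below is not committed
            self.committed[key] = offset + 1
```

If the handler fails on a message, its offset is never committed, so a restarted consumer reads that message again instead of skipping it. This is at-least-once processing.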

Finally, the consumer should be able to deserialise messages according to the data format the producer used to serialise them. It needs to understand that format to process the messages correctly.

One needs to understand these prerequisites of Kafka Consumer before using it. It is important to understand the topics, partitions and offsets to use Kafka successfully.

What is Kafka?

In short, Kafka is an open-source distributed event-streaming platform maintained by the Apache Software Foundation. It is used for building real-time data pipelines and real-time streaming applications.

Kafka's design makes it highly scalable. It can run on a single machine or across a distributed cluster, and it is highly configurable. It is also fault-tolerant, meaning that it can continue to operate even if one of its components, such as a broker, fails.

Kafka is widely used by companies for a variety of tasks, such as collecting and analysing data and providing real-time streaming data services. It records and processes data in real time and is designed for high throughput and high availability.

What is its Architecture?

Kafka’s architecture is designed to handle large volumes of streaming data and to move that data seamlessly between applications and their components.

Kafka originated at LinkedIn, which developed it as an internal messaging system. It later attracted wide attention and was adopted by companies around the world.

Kafka is made up of several components: the broker, the producer, the consumer, and ZooKeeper.

The broker stores and manages messages. A cluster contains several brokers, and the data is distributed across them.

The producer publishes messages to the broker, while the consumer reads messages from the broker.

ZooKeeper manages the cluster and maintains its configuration. Newer Kafka releases replace ZooKeeper with KRaft, a consensus protocol built into Kafka itself.

As explained earlier, Kafka's high scalability allows a large number of producers and consumers to work with the platform. It is also highly fault-tolerant: when partitions are replicated and a node fails, the other nodes in the cluster can continue to serve data without interruption.

Additionally, Kafka is highly configurable, allowing users to customise their data streams' throughput, reliability, and latency.

Overall, Kafka's architecture makes it a powerful and versatile platform for streaming data. It is well suited to organisations that want to build reliable, scalable, and secure data pipelines.

What are Kafka Producers?

As mentioned earlier, the producer component in Kafka publishes messages to the broker. It is the first step in the communication process: the producer creates a message and sends it to a Kafka broker.

The producer is responsible for message delivery in Kafka. It can be configured for different delivery semantics, such as at-least-once or exactly-once delivery. It can also preserve the ordering of related messages (ordering is guaranteed within a partition) and compress messages to reduce the size of what it sends to the broker.

The producer also keeps track of metadata for the topics it writes to, such as how many partitions each topic has and which broker leads each partition. This lets it route each message to the right broker; the broker then assigns the message an offset within its partition.
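Routing by key is what keeps related messages together. The sketch below is an assumption-laden illustration: Kafka's default partitioner hashes keys with murmur2, while this version uses `zlib.crc32` purely as a stand-in. The partition numbers will therefore differ from a real cluster, but the property shown is the same: equal keys always map to the same partition, in send order.

```python
import zlib
from collections import defaultdict


def choose_partition(key: bytes, num_partitions: int) -> int:
    """Map a message key to a partition number in [0, num_partitions).

    Kafka's default partitioner hashes keys with murmur2; zlib.crc32 is used here
    only as a stand-in, so the numbers will not match a real cluster.
    """
    if num_partitions <= 0:
        raise ValueError("num_partitions must be positive")
    return zlib.crc32(key) % num_partitions


def route(messages, num_partitions):
    """Group (key, value) messages by partition, keeping send order within each."""
    partitions = defaultdict(list)
    for key, value in messages:
        partitions[choose_partition(key, num_partitions)].append((key, value))
    return dict(partitions)
```

Because every message for `b"user-1"` lands in one partition, a consumer of that partition sees that user's events in the order they were sent.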

In addition, the producer buffers messages before sending them to the broker. This allows it to batch messages together, which can improve throughput.
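A toy model of batching shows why buffering helps: seven messages go out in three requests instead of seven. `ToyBatcher` is hypothetical. It is loosely modelled on the idea behind the producer's `batch.size` setting, but it counts messages rather than bytes and has no `linger.ms` timer.

```python
class ToyBatcher:
    """Buffers messages and hands them off in batches; illustrative only.

    Loosely modelled on the idea behind the producer's batch.size setting, but the
    limit here is a message count rather than bytes, and there is no linger.ms timer.
    """

    def __init__(self, max_batch: int):
        if max_batch < 1:
            raise ValueError("max_batch must be at least 1")
        self.max_batch = max_batch
        self.buffer = []
        self.sent_batches = []  # each entry stands for one request to the broker

    def send(self, message) -> None:
        self.buffer.append(message)
        if len(self.buffer) >= self.max_batch:
            self.flush()

    def flush(self) -> None:
        if self.buffer:
            self.sent_batches.append(self.buffer)
            self.buffer = []
```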

Who consumes Kafka data?

Consumers of Kafka are the entities that consume messages from Kafka topics. These consumers can be either individuals or applications.

Individuals are usually end users who are interested in the messages stored on the Kafka brokers. They can use Kafka's own tools or third-party applications that connect to the brokers to consume the messages, for purposes such as analytics, monitoring, or other tasks.

What are Kafka Consumers?

Kafka Consumers are applications that subscribe to topics and process the real-time stream of data records published to them. They connect to the brokers of a Kafka cluster to consume the messages that producers have published.

Consumers can be grouped into consumer groups, which allows a pool of processes to divide the work of consuming and processing records published on a topic. This makes it easier to scale applications that process data from Kafka topics.
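The division of work inside a consumer group can be pictured with a simplified round-robin assignment, similar in spirit to Kafka's RoundRobinAssignor. This is a sketch only: real groups use the assignor chosen by `partition.assignment.strategy` and rebalance whenever members join or leave.

```python
def assign_round_robin(partitions, consumers):
    """Deal partitions out to group members in turn (simplified round-robin)."""
    members = sorted(consumers)
    if not members:
        raise ValueError("a consumer group needs at least one member")
    assignment = {m: [] for m in members}
    for i, partition in enumerate(sorted(partitions)):
        assignment[members[i % len(members)]].append(partition)
    return assignment
```

With six partitions and three consumers, each consumer gets two partitions. With two partitions and three consumers, one consumer sits idle, because each partition is read by only one member of the group.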

Kafka Consumers are designed to be highly scalable and reliable. They can be configured to commit offsets automatically, and they can subscribe to multiple topics, with partitions processed in parallel across the members of a consumer group.

Building Kafka Consumers

A Kafka Consumer program helps companies streamline data processing and enable real-time analytics. It fetches messages from a Kafka topic, processes them, and then stores them in a database or passes them to another system for further processing.

Kafka Consumers are commonly built in Java or Scala (client libraries also exist for other languages) and are designed to be highly available and fault-tolerant. To create a Kafka Consumer, you need to define the topic it will read from and the type of messages it will receive. Consumers can be configured to read from multiple topics and to process messages either in batches or one at a time as they arrive.

Once you have defined the topic to read from, you can configure the consumer to read from it and process the messages. Consumers can commit their offsets automatically or under program control. If a consumer commits an offset only after the message has been processed, a restarted consumer resumes after the last processed message and nothing is skipped. However, a crash between processing and committing can cause some messages to be processed more than once.

Kafka Consumers can also be programmed to handle message errors and retries. For example, when a consumer fails to process a message, it can retry that message a fixed number of times before discarding it.
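A bounded retry loop might look like the sketch below. `process_with_retries` and the plain-list `dead_letters` store are hypothetical names. Recording the failed message is an optional addition so that "discarding" does not mean losing it silently. Real code usually waits between attempts and retries only errors that are likely to be temporary.

```python
def process_with_retries(message, handler, max_attempts=3, dead_letters=None):
    """Call handler(message) up to max_attempts times.

    Returns the handler's result on success. After the final failure the message
    is discarded, or recorded in dead_letters (a plain list here) if one is given.
    """
    last_error = None
    for _ in range(max_attempts):
        try:
            return handler(message)
        except Exception as exc:  # real code should retry only transient errors
            last_error = exc
    if dead_letters is not None:
        dead_letters.append((message, repr(last_error)))
    return None
```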

Kafka Consumers are essential to any data processing system and should be carefully configured and monitored to ensure optimal performance. They provide an efficient way to process messages and enable real-time analytics.

1) Setting up Kafka Cluster

To be blunt, setting up a Kafka Cluster can be daunting for someone new to the technology.

However, with the right approach, guidance, and knowledge, it can be a fairly straightforward process. A Kafka cluster consists of several brokers, each with its own configuration. The first step is to decide how many brokers you need and which machines should host them.

The next step is to configure each broker with the right settings, such as the broker ID, advertised hostname, port, and log directories. Once these settings are in place, you can start the brokers. Topics for your producers and consumers are created after the cluster is running.
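As a sketch of those per-broker settings, the function below renders a minimal `server.properties` fragment for each broker. It uses ZooKeeper-era key names: `broker.id`, `listeners`, `advertised.listeners` (which carries the advertised hostname and port), and `log.dirs`. A real file needs more settings, such as `zookeeper.connect`, or `node.id`, `process.roles`, and controller settings in KRaft mode. The host names and paths are hypothetical.

```python
def render_broker_properties(broker_id: int, host: str, port: int, log_dir: str) -> str:
    """Render a minimal server.properties fragment for one broker (sketch only).

    Uses ZooKeeper-era key names; a real file needs more settings, e.g.
    zookeeper.connect, or node.id/process.roles and controller settings in KRaft mode.
    """
    lines = [
        f"broker.id={broker_id}",                           # unique per broker
        f"listeners=PLAINTEXT://0.0.0.0:{port}",            # where the broker listens
        f"advertised.listeners=PLAINTEXT://{host}:{port}",  # address clients are told to use
        f"log.dirs={log_dir}",                              # where partition data is stored
    ]
    return "\n".join(lines) + "\n"


# Hypothetical three-broker layout: one broker per host.
HOSTS = ["kafka-1.example.internal", "kafka-2.example.internal", "kafka-3.example.internal"]
CONFIGS = {
    broker_id: render_broker_properties(broker_id, host, 9092, "/var/lib/kafka/data")
    for broker_id, host in enumerate(HOSTS, start=1)
}
```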

To form a single Kafka cluster, the brokers must all connect to the same coordination service: the same ZooKeeper ensemble or, in newer KRaft-based releases, the same controller quorum.

Finally, you will need to set up security settings such as user and group rights, SSL/TLS, and authentication settings. After all of these settings have been configured, you will be ready to start producing and consuming messages from your Kafka cluster.

2) Creating a Kafka Topic

Creating a Kafka topic is pretty easy; it is a piece of cake if you have made it through the cluster setup stage! First, you need a running Kafka cluster, a group of computers that work together to provide a distributed messaging system. Once the cluster is up and running, you can create a topic.

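When you create a topic, you specify the number of partitions and a replication factor, for example with ‘bin/kafka-topics.sh --create --topic test --partitions 3 --replication-factor 1 --bootstrap-server localhost:9092’. The number of partitions determines how the topic's messages are split up, while the replication factor determines how many copies of each partition are stored on different brokers.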
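To see how partitions and the replication factor interact, here is a simplified placement sketch that puts each partition's replicas on distinct brokers, shifting round-robin. It is an illustration under stated assumptions. Kafka's real placement logic is more involved (for example, it can be rack-aware), but like this sketch it rejects a replication factor larger than the number of brokers.

```python
def place_replicas(num_partitions, replication_factor, brokers):
    """Put each partition's replicas on distinct brokers, shifting round-robin.

    Simplified: Kafka's real placement logic is more involved (e.g. rack awareness).
    """
    if replication_factor > len(brokers):
        raise ValueError("replication factor cannot exceed the number of brokers")
    n = len(brokers)
    return {
        p: [brokers[(p + r) % n] for r in range(replication_factor)]
        for p in range(num_partitions)
    }
```

With three partitions, a replication factor of 2, and brokers 1 to 3, this yields `{0: [1, 2], 1: [2, 3], 2: [3, 1]}`: six stored copies in total, and no broker holds two copies of the same partition.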

Once the topic is created, it can be used to publish and subscribe to messages.

3) Configuring the Kafka Consumer Application

Kafka consumer applications must be configured carefully so that they are set up correctly and can receive messages from the Kafka cluster. The configuration steps include setting up the consumer group, setting the consumer offset, and configuring deserialisation.

Firstly, the consumer group needs to be configured by assigning a group ID (the group.id setting) to the application. The group ID identifies the consumer group, and consumers that share the same group ID divide the topic's partitions among themselves.

The consumer offset also needs to be set up by specifying where in the topic the consumer should start reading when its group has no committed offset (the auto.offset.reset setting). This can be set to either “latest” or “earliest”.
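The sketch below collects the two settings just described in a config dict using Kafka's consumer property names, with example values. It then models, in a hypothetical `starting_offset` function, how `auto.offset.reset` is applied: a valid committed offset always wins, and the reset policy only applies when there is none or it has fallen out of the log.

```python
# Consumer settings named in the text; the values are example choices.
CONSUMER_CONFIG = {
    "bootstrap.servers": "localhost:9092",
    "group.id": "test",
    "auto.offset.reset": "earliest",  # "latest" is Kafka's default
}


def starting_offset(committed, log_start, log_end, auto_offset_reset):
    """Where a consumer begins reading one partition (toy model of auto.offset.reset).

    A valid committed offset always wins; the reset policy only applies when there
    is no committed offset or it no longer exists in the log (e.g. after retention).
    """
    if committed is not None and log_start <= committed <= log_end:
        return committed
    if auto_offset_reset == "earliest":
        return log_start
    if auto_offset_reset == "latest":
        return log_end
    raise ValueError(f"no valid committed offset and auto.offset.reset={auto_offset_reset!r}")
```

“earliest” replays everything still retained, while “latest” only sees messages produced after the consumer starts.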

Next, consumer deserialisation must be configured. This is done by providing a class that implements the Deserializer interface. This class converts the incoming binary payload into the desired type.

The deserialiser class then needs to be registered with the Kafka Consumer (through the key.deserializer and value.deserializer settings) so that it is used to deserialise incoming messages.
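Here is a Python illustration of what a deserialiser does. It is shaped after the Java `Deserializer` interface, whose core method is `deserialize(String topic, byte[] data)`. The `Order` type and `OrderJsonDeserializer` class are hypothetical, and the sketch assumes the producer wrote UTF-8 JSON.

```python
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class Order:
    """Hypothetical message type used only for this example."""
    order_id: str
    amount: float


class OrderJsonDeserializer:
    """Converts a record value's raw bytes into an Order.

    Shaped after the Java Deserializer interface's deserialize(topic, data)
    method; this Python class is an illustration, not a Kafka client API.
    """

    def deserialize(self, topic: str, data: Optional[bytes]) -> Optional[Order]:
        if data is None:  # null values (e.g. tombstones) stay null
            return None
        payload = json.loads(data.decode("utf-8"))
        return Order(order_id=str(payload["order_id"]), amount=float(payload["amount"]))
```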

4) Compiling and Running the Consumer Application

Compiling and running the Kafka Consumer application is an important step in using the Apache Kafka platform. To commence, you must first download the latest version of Kafka from the official website of Apache.

Once the download is complete, unpack the downloaded archive (a .tgz file) into a directory, for example with ‘tar -xzf’ or a tool like WinZip or 7-Zip. The next step is to compile the Kafka Consumer application.

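This can be done with your usual build tool, such as Maven or Gradle, using the kafka-clients library as a dependency. The Kafka directory also contains the ‘bin/kafka-run-class.sh’ script, which runs an already compiled Java class with Kafka's libraries on the classpath rather than compiling source code. For example, you could run ‘bin/kafka-run-class.sh org.apache.kafka.tools.ConsumerTool’, with administrator permissions if your setup requires them.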

Once the application is compiled, you can start consuming. To try this from the command line, navigate to the Kafka directory and run ‘bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic test --group test’, with administrator permissions if required.

This will launch the Kafka Consumer application and it will begin to consume messages from the topic ‘test’.

Finally, you can monitor the Kafka Consumer application to make sure it is running correctly. To do this, run ‘bin/kafka-consumer-groups.sh --bootstrap-server localhost:9092 --describe --group test’.

This will display the status of each partition the group consumes: the current committed offset, the log-end offset, the lag between them, and the consumer instance assigned to that partition.
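Lag is simply the distance between the newest message in a partition and the group's committed position. The hypothetical helper below computes it from two offset maps. In this sketch, partitions without a committed offset are reported as `None`.

```python
def consumer_lag(committed, log_end):
    """Per-partition lag = log-end offset - committed offset.

    Partitions with no committed offset are reported as None in this sketch.
    """
    return {
        p: (end - committed[p]) if p in committed else None
        for p, end in log_end.items()
    }
```

A lag that keeps growing over time means the consumer is not keeping up with the producers.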

Follow the steps above, and you can compile and launch the Kafka Consumer application. This lets you consume messages from the topic ‘test’ and monitor the Kafka Consumer's performance.

Deploying Kafka Consumers

Deploying Kafka Consumers is an integral part of running a successful Kafka system. Consumers are responsible for consuming messages from topics and processing them according to the defined business logic. To deploy consumers successfully, a few crucial steps must be taken as a prerequisite.

First, the consumer needs to be created and configured. This involves setting up the consumer group, specifying the topics to consume from, and defining the message-processing logic.

Once the consumer is created and configured, it must be deployed. Consumers run as separate applications on servers, virtual machines, or containers, not inside the brokers. Deployment therefore means launching the consumer code in its target environment and configuring it to connect to the Kafka brokers.
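A deployed consumer should also stop cleanly when its environment shuts it down, finishing the current batch and committing before it exits. The sketch below assumes hypothetical `poll`, `process`, and `commit` callables standing in for a real client. It reacts to SIGTERM, which process managers such as Kubernetes and systemd send before forcing a process to stop. In the Java client, the usual pattern is to call `KafkaConsumer.wakeup()` from a shutdown hook and then `close()` the consumer.

```python
import signal
import threading


def install_shutdown_handler(stop_event: threading.Event) -> None:
    """Set stop_event when the process receives SIGTERM."""
    def _on_sigterm(signum, frame):
        stop_event.set()
    signal.signal(signal.SIGTERM, _on_sigterm)  # must be called from the main thread


def run_until_stopped(poll, process, commit, stop_event: threading.Event) -> None:
    """Poll -> process -> commit loop that finishes its current batch, then exits."""
    while not stop_event.is_set():
        for record in poll():
            process(record)
        commit()  # commit after each batch so a restart resumes from here
```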

Finally, the consumer should be monitored and managed on an ongoing basis. This means tracking the consumer's performance, availability, and lag, and making sure that it keeps processing messages.

Deploying Kafka Consumers is an important step in managing a Kafka system, and it is essential to have a well-planned and tested deployment process in place.

How is Kafka used for real-time streaming?

As discussed earlier, Kafka can stream data in real time. This capability is used to build real-time streaming data pipelines and applications.

Kafka can process streams of data in real time and deliver them to other systems through a distributed cluster architecture. It works rather like a spider web of interconnected nodes.

Kafka can therefore also be used to process and analyse data from IoT devices and other sources. It can store large volumes of data and make them available for processing. It is also used to build real-time streaming applications that analyse data as it arrives and deliver insights.

Kafka is highly scalable and fault-tolerant and can be used in distributed architectures. It is also used to process data from multiple sources and deliver them to other systems in a reliable and timely manner. Kafka is a popular choice for real-time streaming because of its scalability and performance.

A) Extraction of Data Into Kafka

Data extraction into Kafka is a process of collecting, transforming, and loading data from various sources into Kafka. It is a form of Extract, Transform, Load (ETL) process, where the data is extracted from various sources like databases, web services, files, etc., then transformed into a format suitable for loading into Kafka and finally loaded into Kafka.
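In practice, this kind of pipeline is often built with Kafka Connect source connectors. The transform step itself can be as small as the sketch below, which turns extracted rows into key/value byte pairs ready to load into a topic. The row shape, the `rows_to_records` name, and the choice of JSON are assumptions made for illustration.

```python
import json


def rows_to_records(rows, key_field):
    """Transform extracted rows (dicts) into (key, value) byte pairs for a topic.

    Rows missing the key field are skipped rather than loaded without a key.
    """
    records = []
    for row in rows:
        if key_field not in row:
            continue
        key = str(row[key_field]).encode("utf-8")
        value = json.dumps(row, sort_keys=True).encode("utf-8")
        records.append((key, value))
    return records
```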

Kafka makes it easy to store and access data in an organised and efficient manner. The process of data extraction into Kafka is beneficial for businesses as it eliminates the need for manual data entry, reducing the risk of errors.

Furthermore, the integration of Kafka with other systems like Apache Spark and Hadoop can further increase the efficiency of data extraction. Data extraction into Kafka also helps improve the speed of data analysis and reporting as it reduces the time taken to access the data from various sources.

B) Transformation of the Data Using Kafka Streams API

Kafka Streams API is a powerful tool for transforming data. It allows you to process streaming data in real time and build applications that can react quickly to changes in the data.

With Kafka Streams, you can easily create a stream of data from a Kafka topic, filter that data, join data from multiple topics, aggregate data, or apply custom transforms to the data.
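Kafka Streams itself is a Java library. In Java, a filter-then-count pipeline would chain `KStream.filter(...)`, `groupByKey()`, and `count()`. The plain-Python analogue below runs the same filter, group-by-key, and count steps over a finite list of hypothetical page-view events, only to show what the operations compute. Real Streams applications update such counts continuously as records arrive.

```python
from collections import Counter


def long_views_per_user(records, min_duration_ms=1000):
    """filter -> group by key -> count over a finite list of (user, event) records."""
    counts = Counter()
    for user, event in records:
        if event["duration_ms"] >= min_duration_ms:  # like KStream.filter(...)
            counts[user] += 1                          # like groupByKey().count()
    return dict(counts)
```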

You can also use the API to maintain continuously updated tables (KTables) that give a current view of the data in your system. This makes it easy to build applications that react quickly to changes and always work with the most up-to-date data.

C) Downstream of the Data

Kafka Consumers are central components of any enterprise data pipeline and handle the downstream flow of data. Downstream consumption is distributed: the partitions of a topic are shared among the members of a consumer group.

Downstream processing is carried out by an application, or a set of applications or services, that processes or analyses the data further to generate reports and other analytical representations.

This downstream processing can be written as custom code or built on existing frameworks, allowing developers to process the data quickly and use it in related applications.

What are the Use Cases?

Kafka is a distributed streaming platform for building real-time data pipelines and streaming applications. It is widely used for various use cases, including log aggregation, metrics collection, real-time data processing, and event-driven architectures.

Kafka is suitable for scenarios where high throughput, low latency, and fault tolerance are required.

Some of Kafka's most common use cases are log aggregation, event streaming, and website activity tracking. Log aggregation is a great use case for Kafka because it can process large volumes of data quickly and reliably.

1) Messaging

Kafka is a powerful messaging system that is increasingly popular for messaging use cases. It is a distributed system that allows fast, reliable messaging between applications and services. It is highly scalable, highly available, and fault-tolerant, making it an ideal choice for messaging applications.

Kafka is particularly well-suited for messaging applications that need to process data in real time. It can be used to store and process data from a variety of sources, including sensors, applications, and databases.

Kafka processes data in parallel across partitions, which allows high-speed processing of large amounts of data and makes it suitable for applications with large data sets.

2) Log Aggregation

Kafka is becoming an increasingly popular choice for log aggregation. With Kafka, organisations can easily collect, store and analyse log data from multiple sources in one place. As a distributed streaming platform, Kafka is known for its scalability and fault tolerance, which make it an ideal choice for log aggregation.

Kafka can ingest large amounts of data in real time, making it ideal for log aggregation. Kafka also provides a distributed storage layer, which makes it easy to store large amounts of log data in a way that can be efficiently retrieved and analysed.

3) Metrics

Kafka is a powerful tool for gathering and analysing metrics, making it an excellent choice for data-driven decision-making. Because it streams data in real time, it can collect data from multiple sources, such as web logs, user activity, and system performance.

This data can then be analysed to gain insights into user behaviour, system performance, and more.

Kafka also retains data for later analysis, which enables more in-depth analysis. Furthermore, Kafka can be integrated with processing frameworks such as Hadoop or Apache Spark to enable more complex analyses. All of these features make Kafka an ideal choice for metrics collection and analysis.

4) Commit Logs

Kafka can serve as an external commit log for a distributed system. The log helps replicate data between nodes and acts as a re-syncing mechanism that lets failed nodes restore their data. Kafka's log compaction feature, which keeps the latest record for each key, supports this usage. Because Kafka is distributed, fault-tolerant, and scalable, it is also well suited to logging and tracking application performance.

With Kafka, developers can easily view real-time data streaming from multiple sources and make decisions about how to improve their applications.
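A commit log is useful because replaying it from the start rebuilds state. Kafka's log compaction keeps only the latest record per key, so the replay stays short without changing the result. The two toy functions below illustrate both ideas on an in-memory list of `(key, value)` records. Here a `None` value plays the role of a tombstone (delete marker). Real compaction runs in the background and eventually drops old tombstones too.

```python
def rebuild_state(log):
    """Replay (key, value) records in order to rebuild a key-value store."""
    state = {}
    for key, value in log:
        if value is None:  # a tombstone (null value) deletes the key
            state.pop(key, None)
        else:
            state[key] = value
    return state


def compact(log):
    """Keep only the latest record for each key, in log order (like log compaction)."""
    latest_offset = {}
    for offset, (key, _value) in enumerate(log):
        latest_offset[key] = offset
    return [log[o] for o in sorted(latest_offset.values())]
```

For the log `[("a", 1), ("b", 2), ("a", 3), ("b", None)]`, compaction leaves `[("a", 3), ("b", None)]`, and replaying either version yields `{"a": 3}`.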

Optimisations for real-time data with Kafka

Real-time data processing is becoming increasingly important for businesses as they look to process data quickly to gain valuable insights. Kafka is one of the most popular solutions for real-time data processing due to its scalability, fault tolerance, and support for streaming data.

To get the most out of Kafka, it's important to understand the various optimisation techniques that can be used to improve performance.

One of the most important optimisations is using the right number of Kafka brokers. Adding brokers can improve throughput and scalability, but it also increases cost and operational overhead. It's therefore important to monitor your system to ensure that you are using the optimal number of brokers.

Additionally, you should distribute the data load evenly across multiple brokers. In Kafka, this form of sharding is done through partitioning: spreading a topic's partitions across brokers can improve performance and reduce latency. Replication, which keeps copies of each partition on several brokers, improves fault tolerance.

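Finally, optimising the Kafka producer and consumer settings is also essential. For example, increasing the number of partitions can improve performance because more data can be processed in parallel. Within a consumer group, each partition is read by at most one consumer, so the partition count caps the group's parallelism.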

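It's also important to tune buffering and fetch settings, such as the producer's buffer.memory and batch.size and the consumer's max.poll.records and fetch sizes. Messages are retained on the brokers, so a slow consumer does not lose data immediately. However, if it falls behind for longer than the topic's retention period, unread messages can be deleted before they are consumed.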

By following these optimisations, you can ensure that your Kafka system can handle real-time data processing efficiently. This will help you gain valuable insights quickly and improve scalability, fault tolerance, and overall performance.

Conclusion

The Kafka Consumer is an effective tool for helping businesses gain insight into their data and better understand their customers. It can collect and analyse data from various sources, including site logs and social media, and is essential to any data-driven enterprise.

The Kafka Consumer is also highly scalable and dependable, making it an excellent candidate for production use. Thanks to its simple APIs, companies can quickly build and deploy Kafka Consumers to access their data and use its many features.

In conclusion, the Kafka Consumer is a versatile and effective solution that may assist businesses in better managing their data and maximising their consumer insights.

drives valuable insights

Organize your big data operations with a free forever plan

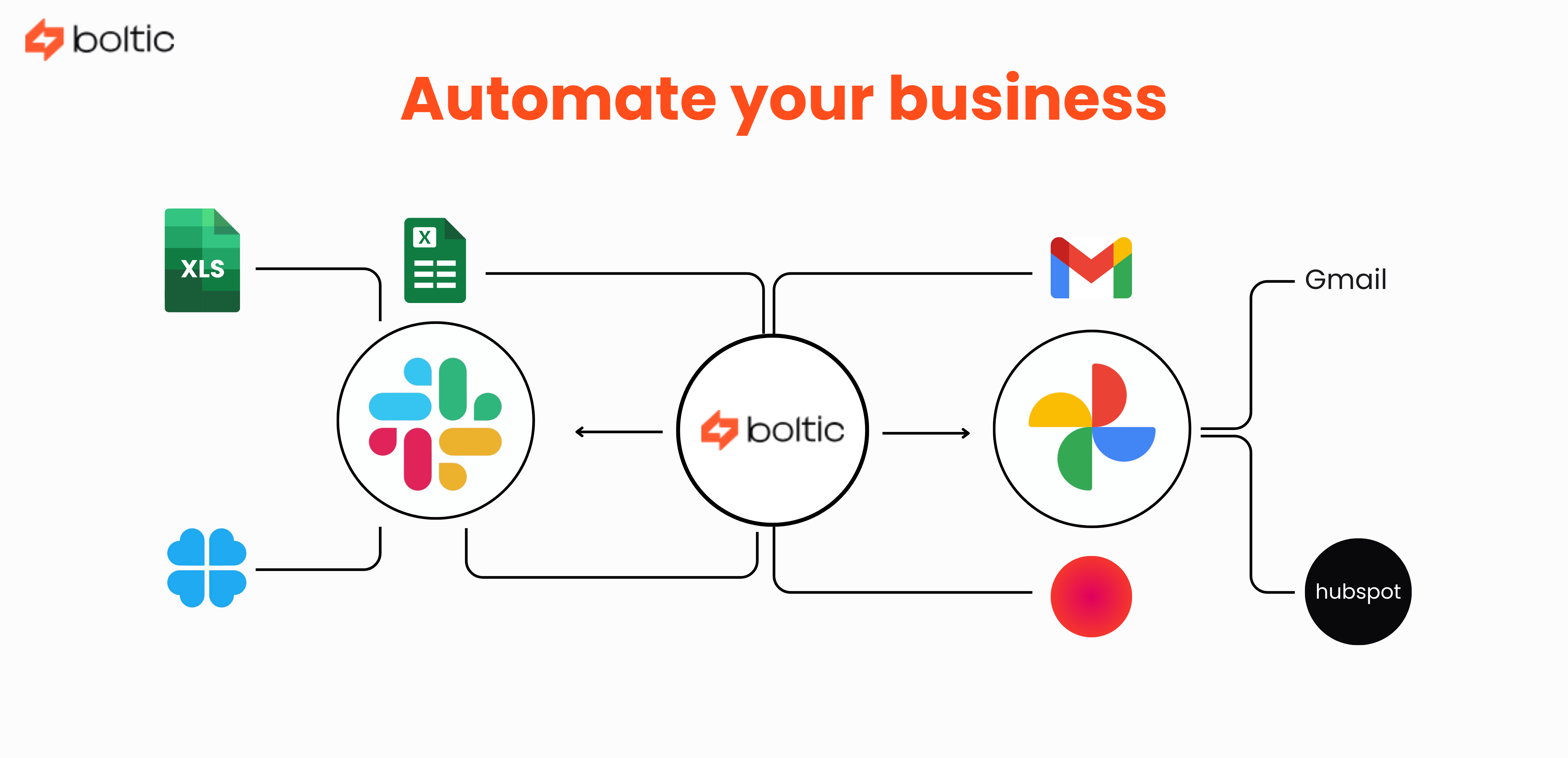

An agentic platform revolutionizing workflow management and automation through AI-driven solutions. It enables seamless tool integration, real-time decision-making, and enhanced productivity

Here’s what we do in the meeting:

- Experience Boltic's features firsthand.

- Learn how to automate your data workflows.

- Get answers to your specific questions.

.avif)